Nowadays, a lot of the smartphones, appliances, and devices we see all around us call themselves smart and AI-powered. What does that really mean? Every one of these smart devices learns through algorithms and the large amounts of annotated training data fed to it. Algorithms are simply the sets of rules programmed into the machine, on which it operates. In fact, smart assistants like Alexa, Siri, and Google Assistant can recognize your speech and come up with a specific answer to your specific question.

This happens after observing your usage patterns and behaviors with an artificial neural network, which is modeled on the neural networks present in the human brain. In fact, the very first artificial neuron design was based on the basic structure of a human neuron. That is why neurons are at the core of the learning and thinking process, be it in humans or machines.

Let us look at a brief timeline of AI and machine learning history.

The Concept of Artificial Neurons

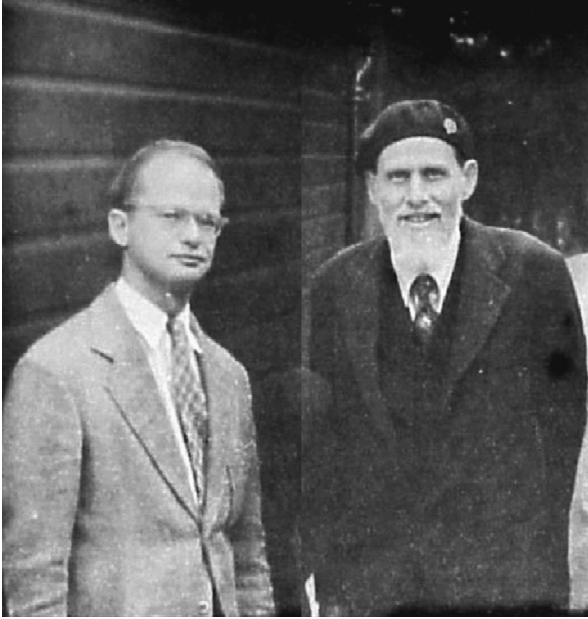

In 1943, a logician and a neurophysiologist came together to put forth the idea of an artificial neuron. Logician Walter Pitts and neurophysiologist Warren McCulloch published a paper titled ‘A Logical Calculus of the Ideas Immanent in Nervous Activity’. It’s quite a mouthful. In it, they presented the first mathematical model of neural networks.

Source: History of Information

Commonly referred to as the McCulloch-Pitts neuron, this model mimics the functionality of a biological neuron. It demonstrates how a mental process like learning can take place with the help of neural connections. It’s worth noting that Pitts had not received a university degree when he came up with the idea of an artificial neuron. In fact, he wasn’t even an official student. He educated himself by assisting scholars such as Rudolf Carnap and Warren McCulloch himself. The only degree he later received was an Associate of Arts, awarded by the University of Chicago for this very work with McCulloch as an unofficial student.

The model works on Boolean logic, a system in which inputs that are each on or off determine a single on-or-off output. A single unit can compute functions such as AND, OR, and NOT, while XOR and XNOR require a small network of units. At the time, devices could only carry out commands and did not store any data or results for future predictions. Although this original model was limited to two types of inputs, it was an important step towards what came next.
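The Boolean behaviour of such a neuron can be sketched in a few lines of Python. This is only an illustration, not the notation of the 1943 paper: the function name `mp_neuron`, the `threshold` parameter, and the way inhibitory inputs are passed as indexes are all assumptions made for this example.

```python
def mp_neuron(inputs, threshold, inhibitory=()):
    """Return 1 (fire) or 0 (stay silent) for a list of binary inputs.

    `inhibitory` holds the indexes of inputs that veto firing when they are on.
    """
    # Any active inhibitory input prevents the neuron from firing.
    if any(inputs[i] for i in inhibitory):
        return 0
    # Otherwise count the active excitatory inputs and compare to the threshold.
    excitatory_sum = sum(x for i, x in enumerate(inputs) if i not in inhibitory)
    return 1 if excitatory_sum >= threshold else 0


def AND(a, b):
    return mp_neuron([a, b], threshold=2)  # both inputs must be on


def OR(a, b):
    return mp_neuron([a, b], threshold=1)  # one active input is enough


def NOT(a):
    # A single inhibitory input with threshold 0: fires unless the input is on.
    return mp_neuron([a], threshold=0, inhibitory=(0,))


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", AND(a, b), "OR:", OR(a, b))
    print("NOT 0:", NOT(0), "NOT 1:", NOT(1))
```

Note that nothing here is learned: the thresholds are chosen by hand, which matches the article's point that these early units only carried out fixed logic.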

Practice Made Neuron Perfect

Later, in 1949, a Canadian psychologist named Donald Hebb theorized about the synaptic connections between neurons in the human brain. It was the first time a psychological learning rule for synaptic change in neurons was introduced, and it came to be known as the ‘Hebb synapse’.

His theory explained how a neuron can strengthen a learning pattern into a learned behavior or habit when it performs the same task again and again, or sustains a thought pattern for a very long time. Essentially, he theorized that our brain cells are just like us: they need to do a task repeatedly, in a fixed or a mixed pattern, in order to learn something. McCulloch and Pitts later developed a cell assembly theory in a 1951 paper, and their model came to be known as Hebbian theory, since it was very much based on Hebb’s earlier discovery. Any machine learning model that followed this idea was said to exhibit Hebbian learning. This theory served as a watershed moment for research in the field of machine learning.

By 1950, a more serious effort had started to take root to examine whether a machine can generate answers like a human. The British mathematician Alan Turing (yes, the one from The Imitation Game, played by Benedict Cumberbatch) came up with the Turing test.

The Turing Test

Alan Turing was a mathematician and computer scientist from London who made an important contribution to machine learning. Turing is already well known for his work during World War II, when he built on the Polish Bomba to design the Bombe, a machine that more effectively decoded the complex coded messages sent by the Germans. After the war, he continued to develop his ideas about computer science and published a paper titled ‘Computing Machinery and Intelligence’.

This paper proposed the idea of the imitation game, which later became the Turing test: a simple test of whether a machine can think like a human. Turing believed that in about 50 years’ time it would be possible to program computers with a storage capacity of around 10^9 bits to play the imitation game so well that an average interrogator would not have more than a 70% chance of making the right identification between a computer and a human. Wouldn’t you know it, in 2014 a chatbot named Eugene Goostman was reported to have passed the Turing test by convincing 33% of the judges that it was a 13-year-old Ukrainian boy and not a machine.

Further, in 1951, a cognitive and computer scientist named Marvin Minsky started to work on his idea of creating a learning machine. This machine is considered one of the first pioneering attempts in the field of artificial intelligence.

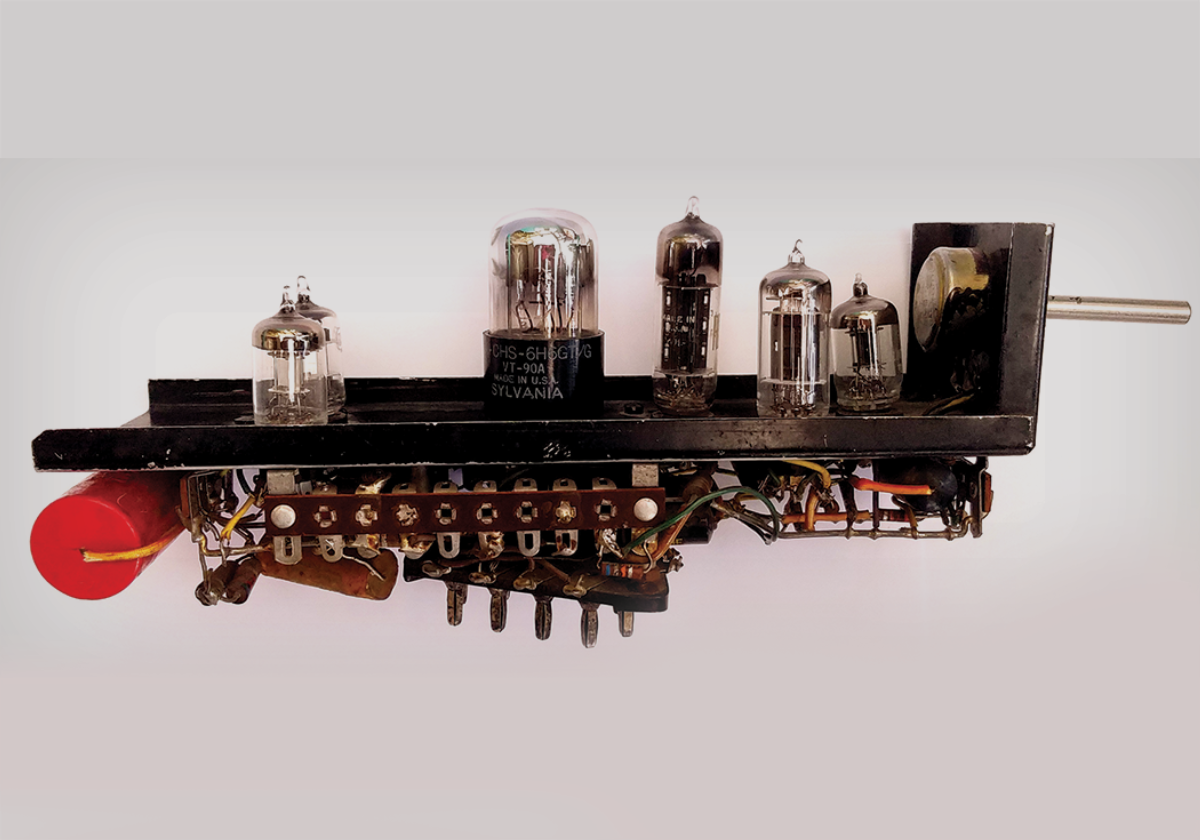

First Artificial Neural Network

As a student, Marvin Minsky dreamt of creating a machine that could learn. He wanted to better understand intelligence by recreating it, but the machine he imagined had many technical requirements that were very expensive and needed funding. To bring his device to life, Minsky secured funding from the Harvard psychologist George Miller. With the help of fellow Princeton graduate Dean Edmonds, he created the first artificial neural network, called SNARC, or Stochastic Neural Analog Reinforcement Calculator. The design included about 40 randomly interconnected neurons inspired by Hebbian learning. The main aim was to make the system learn by retaining a memory of its past attempts.

Minsky and Edmonds tested its learning capabilities by having the machine navigate a virtual maze. A maze problem has multiple solutions, or routes, that lead successfully to the exit, but the best one is the one that takes the least time. The neurons in SNARC started out solving the maze at random. Every result gave the neurons more information about which move was probably correct and which was probably wrong. By training itself on many combinations, the network developed a pattern and refined it to arrive at the best route.

Until then, computers were typically trained to solve problems with a fixed solution, but to reach the next level they needed to solve strategic problems. The best way to train them was to teach them to play games, since games are strategic in nature and the kind of thinking they demand provides a structure for solving other strategic problems. This was the belief of computer scientist Arthur Samuel, who created a computer program that played checkers.

The Checkers Game

Arthur Samuel was an American pioneer in the field of computer gaming and artificial intelligence. He created the world’s first self-learning program, which played checkers on its own. He chose this game because it was simple and let him focus on learning patterns. While working at IBM, he developed the program by implementing the first alpha-beta pruning algorithm, which searches a game tree using the minimax strategy.

By now, computers had gained the capability to store data and predict outputs. In 1957 they were about to learn how to see, when an American psychologist named Frank Rosenblatt designed the perceptron.
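For readers curious about the search technique mentioned for Samuel's checkers program, here is a minimal sketch of minimax with alpha-beta pruning. It is not Samuel's code: the toy game tree (nested lists with numeric scores at the leaves), the function name `alphabeta`, and the `visited` list used to show which leaves were actually examined are all assumptions for illustration.

```python
import math


def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf, visited=None):
    """Return the minimax value of `node`, skipping branches that cannot matter."""
    if not isinstance(node, list):  # a leaf holds the score of a final position
        if visited is not None:
            visited.append(node)
        return node
    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta, visited))
            alpha = max(alpha, best)
            if alpha >= beta:  # the opponent would never allow this branch
                break
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta, visited))
        beta = min(beta, best)
        if alpha >= beta:  # we already have a better option elsewhere
            break
    return best


if __name__ == "__main__":
    # Three possible moves for us, each answered by two possible replies.
    tree = [[3, 5], [2, 9], [0, 1]]
    seen = []
    print(alphabeta(tree, True, visited=seen))  # 3
    print(seen)  # [3, 5, 2, 0]: the leaves 9 and 1 were pruned
```

The pruning is what makes deeper searches affordable: once a reply is found that makes a move worse than one already available, the remaining replies to that move need not be examined.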

The Perceptron

A perceptron is a neural network composed of a single artificial neuron. It was designed on biological principles, inspired by the McCulloch-Pitts neuron and Hebbian theory, and it was built for image recognition. The artificial neuron in a perceptron has a number of inputs, each with an associated weight. The total input the neuron receives is the weighted sum of all its inputs, and if that sum exceeds a threshold value, the neuron fires.
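The weighted-sum-and-threshold idea can be sketched as follows. The article does not describe how the weights are found, so the training loop below uses the standard perceptron learning rule as an assumption; the names `predict` and `train`, the learning rate, and the use of a bias term (the negative of the threshold) are likewise choices made for this example.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs exceeds the threshold."""
    # The bias plays the role of a negative threshold: sum > threshold
    # is the same test as sum + bias > 0 when bias = -threshold.
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(samples, epochs=20, lr=1.0):
    """Adjust weights with the perceptron learning rule (an assumption here)."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            # Nudge each weight towards the correct answer, scaled by its input.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


# Two classes that a straight line can separate: the logical AND of two inputs.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

if __name__ == "__main__":
    weights, bias = train(AND_SAMPLES)
    print([predict(weights, bias, x) for x, _ in AND_SAMPLES])  # [0, 0, 0, 1]
```

Because the two classes here can be separated by a straight line, the rule settles on working weights after a handful of passes over the data.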

“…the perceptron is the embryo of an electronic computer that will eventually walk, talk, see, write, reproduce itself, and be conscious of its own existence…” - New York Times

Well, it does feel like a bit much, but then again, is it really that different from what we see in newspapers nowadays? This model performed binary classification, which means it could distinguish between two classes. However, it worked only for linearly separable problems and failed on non-linear ones. This limitation of the perceptron brings us to the next stage of machine learning history, where Marvin Minsky enters the picture once again. He identified the shortcomings of the perceptron, which contributed to the AI winter.

The AI Winter

The AI winter was a phase when almost all research stopped after Marvin Minsky criticized Rosenblatt’s perceptron in his 1969 book, co-authored with Seymour Papert, titled ‘Perceptrons: An Introduction to Computational Geometry’. It was quite discouraging for the industry, to say the least. Adding to this was the industry’s failure to fulfil the hyperbolic expectations it had set so far. This prompted investors and government bodies to withdraw funding, leading to an AI winter that lasted from 1974 to the 1980s. Then, in 1986, cognitive psychologist and computer scientist Geoffrey Hinton came as a savior. He provided a solution to the perceptron problem, bringing about a renaissance in the industry.

Renaissance in 1986

Hinton co-authored a paper titled ‘Learning Representations by Back-propagating Errors’, which answered Minsky’s criticism of Rosenblatt’s perceptron. It proposed that if a group of perceptrons is combined into a neural network with hidden layers, the network can perform classification under a non-linear function. In principle, a very wide range of mathematical functions can be approximated in this manner. As a result, the AI industry changed dramatically. A critical flaw in Rosenblatt’s perceptron was suddenly fixed, resulting in a boom in AI.

Further, in 1989, Yann LeCun, regarded as the father of convolutional neural networks, created a system that could recognize handwritten digits. It read zip codes by looking at images of written digits and employing a neural network trained with the backpropagation algorithm. This stood as a landmark achievement in computer vision.

Later on, experiments with games continued in order to make machines better at strategic problem solving. Chess was yet another game that computers were taught to play, and 1997 was the first time a computer triumphed over a human world champion.
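To make the hidden-layer idea behind the 1986 result concrete, here is a small sketch of a two-layer network that computes XOR, the classic function a single perceptron cannot represent. The weights are picked by hand for illustration rather than learned; backpropagation is the procedure that finds such weights automatically, and it is not implemented here. The names `unit` and `xor_network` are hypothetical.

```python
def unit(weights, bias, inputs):
    """One threshold neuron: fire if the weighted sum plus bias is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def xor_network(a, b):
    """Two hidden units feeding one output unit (hand-picked weights)."""
    h_or = unit([1, 1], -0.5, [a, b])    # hidden unit 1: at least one input is on
    h_and = unit([1, 1], -1.5, [a, b])   # hidden unit 2: both inputs are on
    # Output: fire when "at least one" holds but "both" does not.
    return unit([1, -1], -0.5, [h_or, h_and])


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "->", xor_network(a, b))
```

Each hidden unit draws its own straight line through the input space, and the output unit combines the two, which is how stacking layers gets around the linear limit of a single neuron.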

The Day a Computer Won

Feng-hsiung Hsu, a graduate student, was working on his dissertation project, ChipTest, a machine that could play chess. Later, when he started working with IBM researchers, he continued to develop this chess-playing machine with his fellow researchers on the Deep Blue team. To teach the machine various chess moves, the team gained the support of U.S. chess grandmaster Joel Benjamin. Benjamin assisted by compiling a list of moves that the machine could use during the game. He also played against the machine so that the team could identify its flaws. However, when the machine played its first match against world chess champion Garry Kasparov in 1996, it lost by two points. The team went back to the drawing board, worked on improving the machine’s ability, and created a new version of Deep Blue that could consider a staggering 200 million positions per second. Finally, in 1997, IBM’s Deep Blue defeated world chess champion Garry Kasparov.

It was during this time that a few researchers realized the importance of data for machine learning. While many 21st-century researchers focused on machine learning models, in 2006 the American computer scientist Fei-Fei Li prioritized working on datasets that could change the way we think about models. As a result, around 2009, she introduced ImageNet.

The ImageNet 2009

ImageNet is a dataset of images organized for use in visual object recognition. Today it offers approximately 15 million labeled images in 22,000 categories. The project was inspired by two important needs in computer vision: first, the growing demand for high-quality object categorization, and second, the critical need for well-equipped resources to conduct good research. This initiative by Fei-Fei Li aided many deep learning and neural network researchers by providing them with the labeled image datasets they so desperately needed. The annotation of this large amount of data was crowdsourced using Amazon Mechanical Turk. Since then, the ImageNet project has held an annual software contest called the ‘ImageNet Large Scale Visual Recognition Challenge’. The goal is to develop models that perform best on this dataset, that is, identify objects with the lowest error rate.

In 2012, Geoffrey Hinton and his team competed in the ImageNet challenge and cut the error rate to nearly half that of the previous year’s winners. So, what did they do? Well, they used GPU hardware with a deep learning algorithm, which resulted in better ImageNet performance. Following this great victory, many researchers began to work in this field. Companies such as Apple, Amazon, and Facebook became more interested in this technology, and an ecosystem began to form.

Hike in the Field

Initially working to create accurate road maps by extracting data from satellites and street views, Google started working on self-driving technology in 2009, after the success of Anthony Levandowski’s self-driving car Pribot, which was featured on the Discovery Channel show ‘Prototype This!’. This project was later renamed Waymo and became a standalone company in 2016. It is now a part of Alphabet, Google’s parent company.

DeepMind, which was acquired by Google in 2014, developed AlphaGo, an artificial Go player that defeated world champion Lee Sedol at Go, a game far more complex than chess. AlphaGo’s victory urged many scholars and thinkers to consider the use of artificial intelligence in various fields. Its presence in our daily lives made us wonder: can machines really think?

Are Machines Really Thinking?

During this time, a filmmaker named Oscar Sharp created a short film called Sunspring, written by an AI system. The model that created the screenplay used the kind of text prediction technology we see today in smartphones. Another technology, IBM Watson, can be seen as a chatbot in service applications, where it acts as a virtual assistant. It is a question-answering machine that generates insights from large amounts of unstructured data using natural language processing and machine learning. Along with thinking, the other question was whether these machines could get creative. And guess what: that too was attempted, when computer scientist Ian Goodfellow introduced the GAN (Generative Adversarial Network) in 2014.

It is a learning model that discovers patterns in its input data and uses them to generate a new set of outputs. The model can create new artworks by combining two different images. More recently, OpenAI developed a system that creates images from textual descriptions, which users steer through prompt engineering. I’m sure you have seen at least a couple of images produced by this AI program, named DALL·E 2. It is capable of creating images of real as well as fictional objects.

The image that you see here is the result of the text prompt ‘An astronaut riding a horse in photorealistic style’. DALL·E illustrates how imaginative humans and clever systems can collaborate to create new things, thereby increasing our creative potential. However, it looks like this is just the beginning, because on 29 September 2022 Meta announced its prompt-based video generation tool, Make-A-Video, alongside other similar independent or open projects like synthesis.io and rephrase.ai. The main question here is ‘Is AI really thinking?’ The short answer is no.

The current state of AI is the same as any other use of computation: it does what humans ask it to do, and humans need to be very specific about what they ask for. Every good AI model out there is really good at only one thing, meaning that the AI that makes your drawings will not order your birthday cake just because your mom forgot to. But yes, the future is exciting, and we would love to be along for the ride.
